Aya: Body Tracking Interactive Game Using EEG

Brain Hacking, Interaction Design

Project Time: 2016

A project from the OpenBCI: Brain Hacking course in the MFA Design and Technology program at Parsons School of Design, taught by Conor Russomanno, the founder of OpenBCI.

Published on the OpenBCI community: https://openbci.com/community/openbci-game-aya/

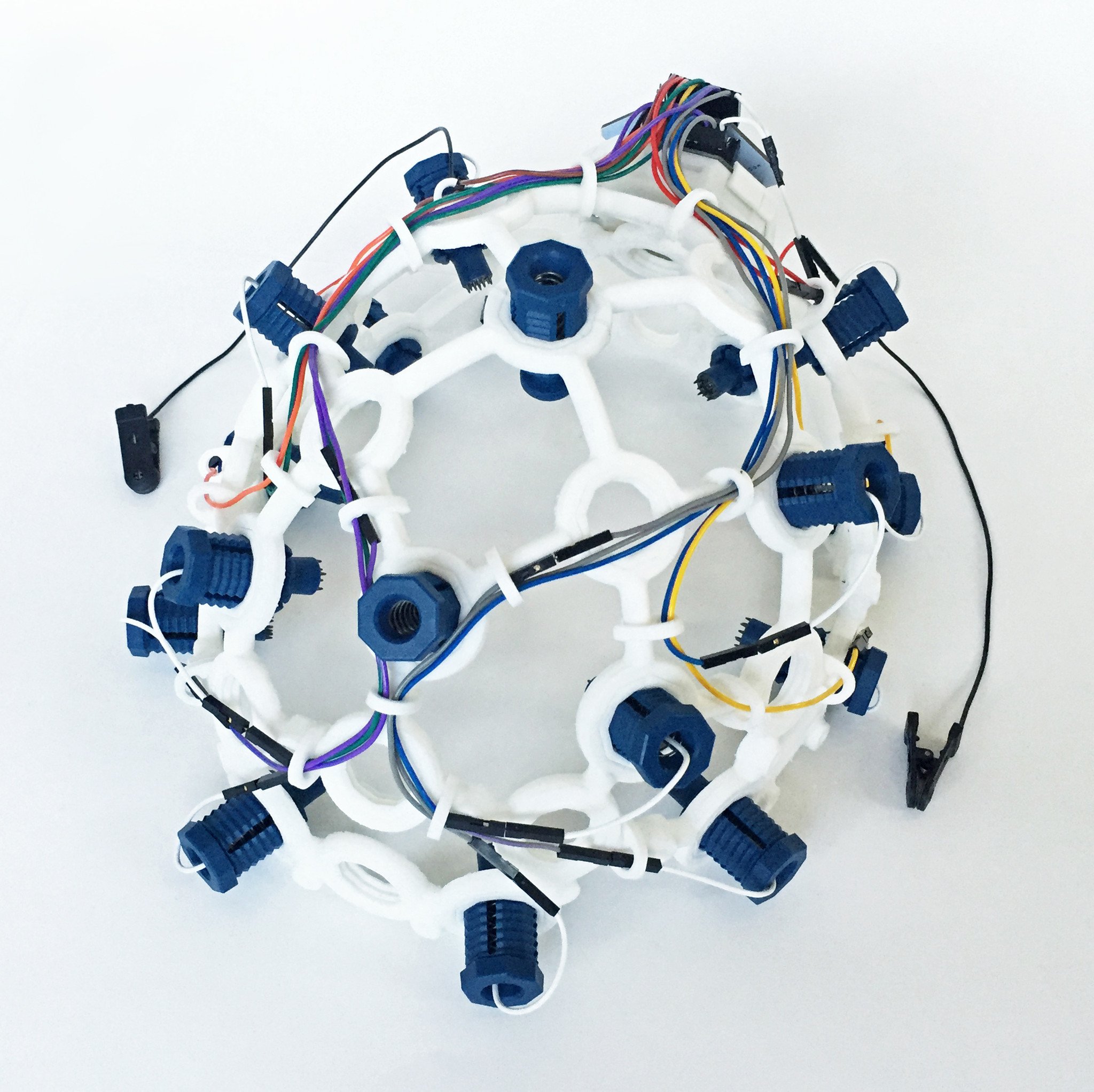

In collaboration with Danli Hu, Xianghan Ma, and Yi Zhu, we developed an interactive game controlled by biosensing data, using OpenBCI sensors and a 3D-printed brain-tracking headset developed by the OpenBCI lab.

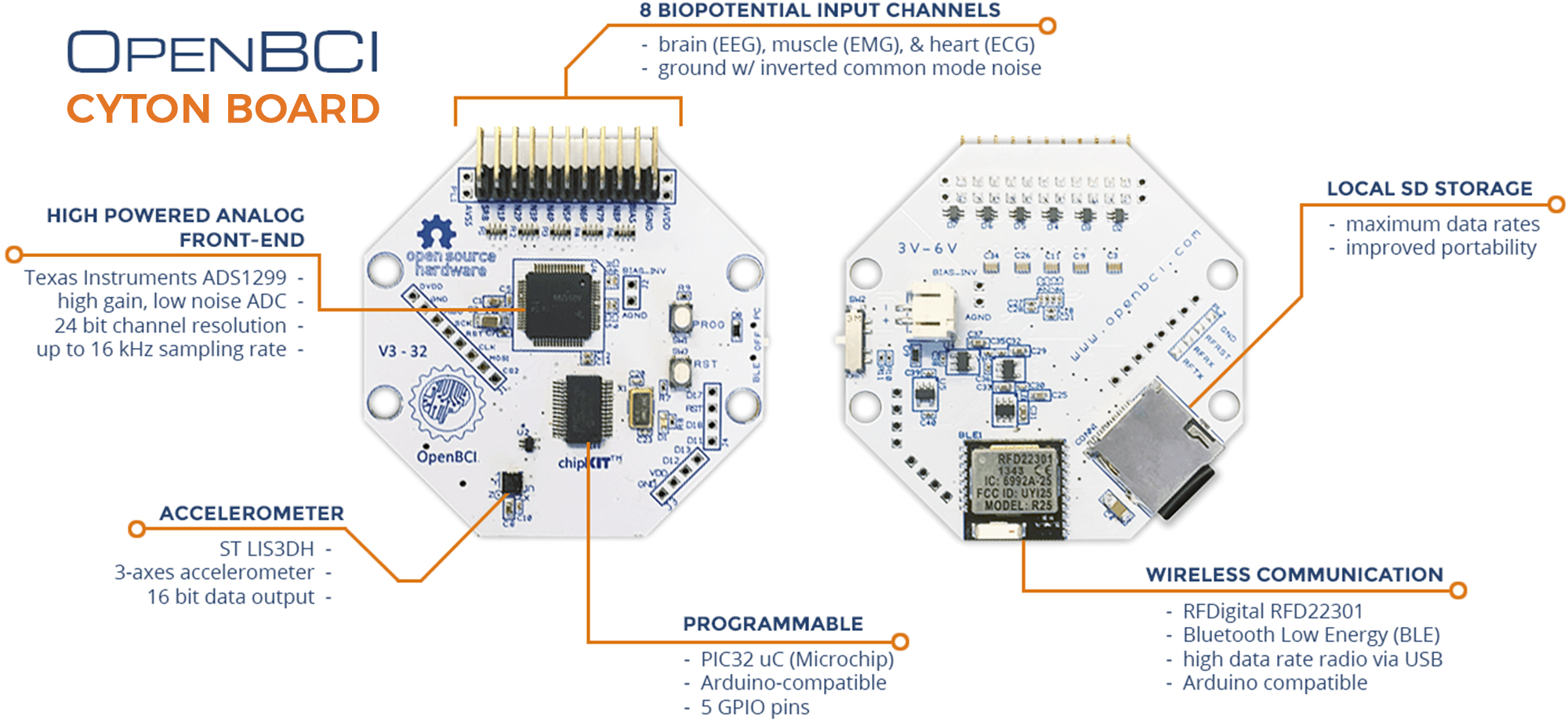

OpenBCI is an open-source platform that develops biosensing hardware for brain-computer interfacing. In the OpenBCI: Brain Hacking Collaboration Lab, led by the founder of OpenBCI, we used their Arduino-compatible biosensing boards to record EMG, ECG, and EEG signals, and used the signals produced by our muscle movements to control the interactive game experience.

The player controls the game interaction with their body movement tracked by the OpenBCI muscle sensing board.

How it works

We connected the OpenBCI headset and Arduino through the OpenBCI Processing library to control the 2D game by tracking muscle data. Below is the demo game, which responds to the wearer's muscle movement data.

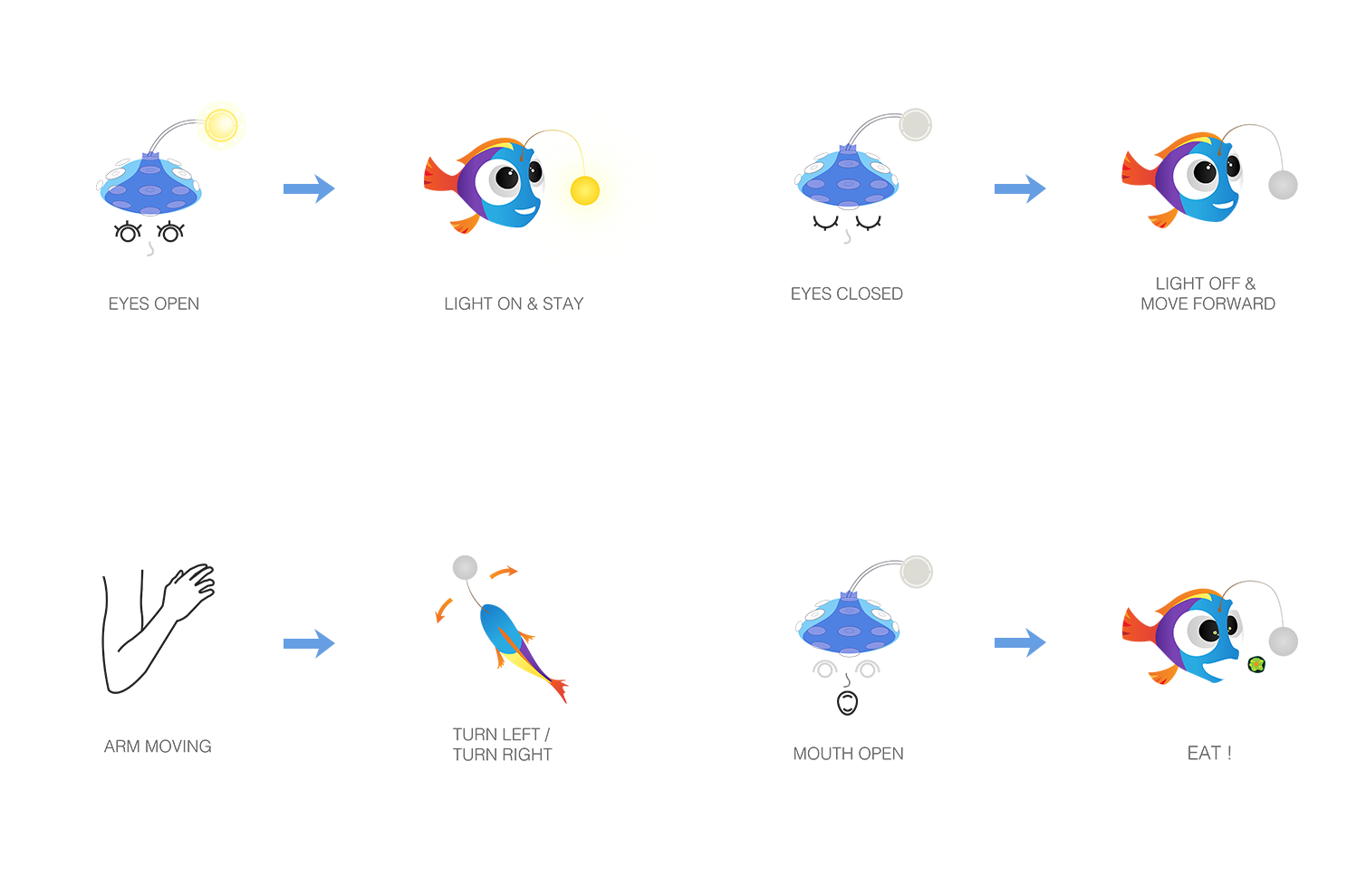

When a user puts on the EEG electrode cap (the 3D-printed headset), the system tracks the user's eye blinks and arm movements, so the user can control the game character with their body.
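As a rough illustration of how blink and arm signals could be turned into game input, here is a minimal sketch in plain Java (the language behind Processing). It is not the code used in the project. The class name `MuscleControl`, the `Action` values, the blink-to-jump and arm-to-move mapping, and the threshold numbers are all hypothetical, and the sample windows are made-up values rather than recorded data. The sketch assumes each channel delivers a short window of samples in microvolts and that a burst of activity shows up as a higher root-mean-square (RMS) amplitude.

```java
// Hypothetical sketch: threshold-based mapping from signal windows to game actions.
class MuscleControl {
    // Illustrative game actions; the real game's controls are not documented here.
    enum Action { NONE, JUMP, MOVE }

    // Root-mean-square amplitude of one window of samples (assumed microvolts).
    static double rms(double[] window) {
        if (window.length == 0) {
            return 0.0; // an empty window carries no activity
        }
        double sumSquares = 0.0;
        for (double v : window) {
            sumSquares += v * v;
        }
        return Math.sqrt(sumSquares / window.length);
    }

    // Compare each channel's RMS to its threshold; the blink channel takes priority.
    static Action classify(double[] blinkWindow, double[] armWindow,
                           double blinkThreshold, double armThreshold) {
        if (rms(blinkWindow) > blinkThreshold) {
            return Action.JUMP;
        }
        if (rms(armWindow) > armThreshold) {
            return Action.MOVE;
        }
        return Action.NONE;
    }

    public static void main(String[] args) {
        // Made-up sample windows: a strong blink burst and a quiet arm channel.
        double[] blink = {80, -90, 85, -75};
        double[] arm = {1, -1, 1, -1};
        // Assumed thresholds; real values would need per-user calibration.
        System.out.println(classify(blink, arm, 50.0, 30.0));
    }
}
```

In a Processing sketch, a function like `classify` would typically be called once per frame inside `draw()`, with the windows filled from the board's incoming data stream. Fixed thresholds are the simplest choice; signal amplitude varies between wearers and electrode placements, so a calibration step would likely be needed in practice.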

Interactive Prototype

User Interaction

Technology

OpenBCI Cyton Board to track muscle activity (Arduino) + Processing (Java)

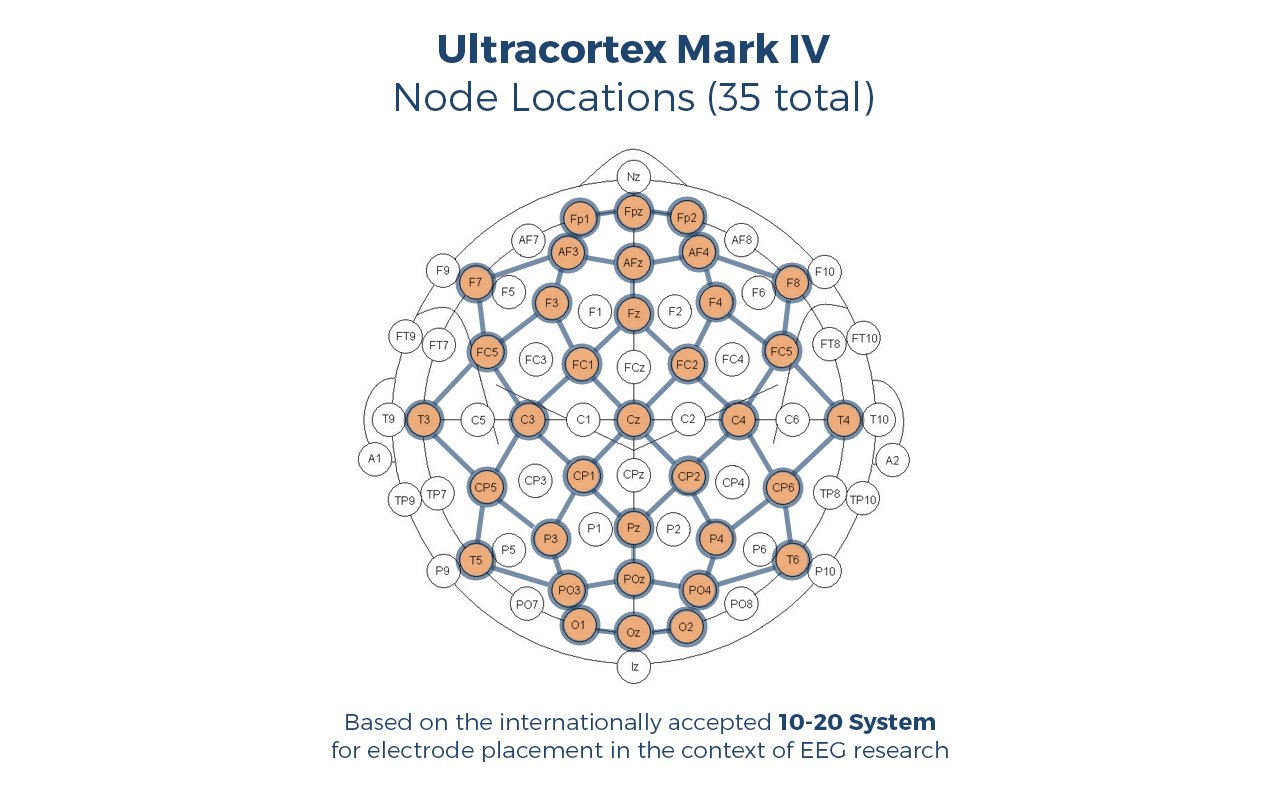

The Ultracortex is an open-source, 3D-printable headset intended to work with any OpenBCI board. It is capable of recording research-grade brain activity (EEG), muscle activity (EMG), and heart activity (ECG). We connected the Ultracortex Mark IV to our Processing game so that our muscle activity could control the game.